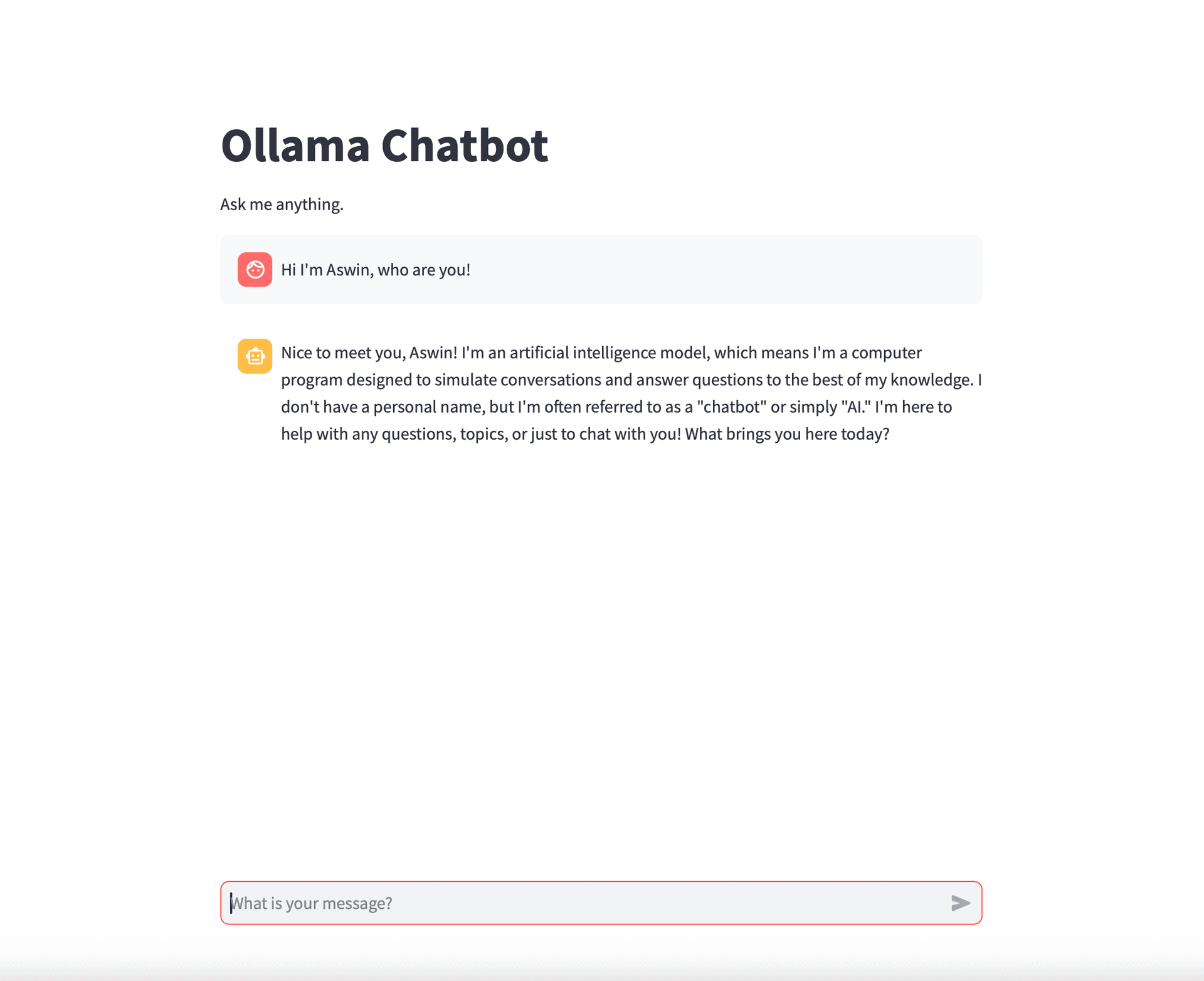

Ollama Chatbot

Super quick local implementation of a chatbot that supports streaming using Ollama (llama3.1) and Streamlit! This was around 35 lines of code, absolutely insane.

I built this lightweight AI chatbot with Streamlit and Ollama, combining local language models with a user-friendly web interface. As someone deeply interested in large language models (LLMs) and eager to sharpen my Python skills, I began this project to create a chatbot that runs entirely on my local machine. It let me dive into the fascinating world of LLMs while improving my Python programming at the same time.

The project uses Ollama to run open-source language models locally, while Streamlit provides an intuitive, responsive front end for user interactions. This version also streams each response chunk by chunk as the model generates it, so the text appears gradually, like a real-time conversation. It's a great starting point for anyone interested in building their own AI-powered chatbot or exploring what local AI models can do. The best part? The code is less than 40 lines long!
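The gradual effect comes from streaming: `ollama.chat(..., stream=True)` returns an iterator of partial responses, and Streamlit's `st.write_stream` renders each piece as soon as it arrives. If you want to slow the typing effect down even more, one option is to wrap the text generator so it pauses between chunks. Here is a minimal sketch; `with_typing_delay` is a hypothetical helper (not part of Ollama or Streamlit), and a hard-coded list of strings stands in for a real model reply so it runs without either library.

```python
import time
from typing import Iterable, Iterator


def with_typing_delay(chunks: Iterable[str], delay: float = 0.02) -> Iterator[str]:
    """Re-yield each text chunk after a short pause.

    Hypothetical helper for illustration, not an Ollama or Streamlit API.
    """
    for chunk in chunks:
        time.sleep(delay)  # wait before handing the next piece to the caller
        yield chunk


# Stand-in for the text pieces a streamed model reply would produce.
fake_chunks = ["Hello", ", ", "how can ", "I help?"]
reply = "".join(with_typing_delay(fake_chunks, delay=0.01))
print(reply)  # Hello, how can I help?
```

In the app, you could pass `with_typing_delay(get_ai_response(st.session_state.messages))` to `st.write_stream` instead of the bare generator; the final text is unchanged, only the pacing differs.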

Let's break down the code and explore its key components:

- Imports and AI Response Function:

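```python
import streamlit as st
import ollama


def get_ai_response(messages):
    """Stream the model's reply to the conversation so far, one text chunk at a time."""
    try:
        # stream=True makes ollama.chat return an iterator of partial responses
        stream = ollama.chat(
            model='llama3.1',
            messages=messages,
            stream=True,
        )
        for chunk in stream:
            # Each chunk carries the next piece of the assistant's message text
            yield chunk['message']['content']
    except Exception as e:
        # Show the problem in the UI (e.g. Ollama not running or model not pulled)
        st.error(f"An error occurred: {e}")
        return
```

- Main Function: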

```python
def main():
    st.title("Ollama Chatbot")
    st.write("Ask me anything.")

    # Initialize chat history
    if 'messages' not in st.session_state:
        st.session_state.messages = []

    # Display chat messages from history on app rerun
    for message in st.session_state.messages:
        with st.chat_message(message["role"]):
            st.markdown(message["content"])

    if prompt := st.chat_input("What is your message?"):
        # Record and display the user's message
        st.session_state.messages.append({"role": "user", "content": prompt})
        with st.chat_message("user"):
            st.markdown(prompt)

        # Stream the assistant's reply; write_stream returns the full text when done
        with st.chat_message("assistant"):
            response = st.write_stream(get_ai_response(st.session_state.messages))
        st.session_state.messages.append({"role": "assistant", "content": response})
```

- Entry Point:

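```python
if __name__ == "__main__":
    main()
```

And that's it! With just a few lines of code, you can create your own AI chatbot using Streamlit and Ollama. To try it, save the three pieces in one file, make sure the model is available locally (for example with `ollama pull llama3.1`), and launch the app with `streamlit run` followed by the file name. Feel free to explore the code further and customize it to suit your needs.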
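To see how the pieces fit together without a running Ollama server or a Streamlit session, here is a stdlib-only sketch that simulates one chat turn. The names `fake_ollama_stream`, `get_text_chunks`, and `chat_turn` are hypothetical: the fake stream only echoes the user's message, but it yields dicts shaped the way the app reads them (`chunk['message']['content']`), and joining the chunks stands in for the full text that `st.write_stream` returns.

```python
from typing import Dict, Iterator, List

Message = Dict[str, str]


def fake_ollama_stream(messages: List[Message]) -> Iterator[dict]:
    """Hypothetical stand-in for ollama.chat(..., stream=True).

    Yields chunk dicts shaped like the ones the app indexes with
    chunk['message']['content']; here it just echoes the last user message.
    """
    last = messages[-1]["content"]
    for piece in ["You said: ", last]:
        yield {"message": {"role": "assistant", "content": piece}}


def get_text_chunks(messages: List[Message]) -> Iterator[str]:
    # Mirrors get_ai_response(): pull just the text out of each chunk.
    for chunk in fake_ollama_stream(messages):
        yield chunk["message"]["content"]


def chat_turn(history: List[Message], prompt: str) -> str:
    """One round trip, mirroring what main() does with st.session_state.messages."""
    history.append({"role": "user", "content": prompt})
    # Joining the chunks stands in for the full text st.write_stream() returns.
    response = "".join(get_text_chunks(history))
    history.append({"role": "assistant", "content": response})
    return response


history: List[Message] = []
print(chat_turn(history, "Hi there"))  # You said: Hi there
print(len(history))  # 2
```

Because the whole list is sent to the model on every turn, the model sees the entire conversation so far, which is what gives the chatbot its memory within a session.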